NVIDIA ConnectX-7 1.6Tbit/s Cedar Fever Module vs ConnectX-7 PCIe form factor

BoM Cost Analysis & Tradeoffs Between Cedar Fever and PCIe Form Factor ConnectX-7, Install Base of Each Version

Welcome to our latest analysis, where we dive into NVIDIA's cutting-edge 1.6Tbit/s ConnectX-7 Cedar Fever module and compare it with the ConnectX-7 PCIe form factor. This blog post breaks down the Bill of Materials (BoM) cost and analyzes why NVIDIA's DGX H100 and some hyperscalers, such as Microsoft Azure, choose the Cedar Fever module, while most OEMs (HPE, Dell, Supermicro) and hyperscalers such as Meta stay with the standard PCIe ConnectX-7 form factor. We analyze public photos of every HGX chassis to determine whether it uses the Cedar Fever module.

Disclaimer: only publicly available information will be used and analyzed within this blog post.

400Gbit/s ConnectX-7 PCIe Form Factor

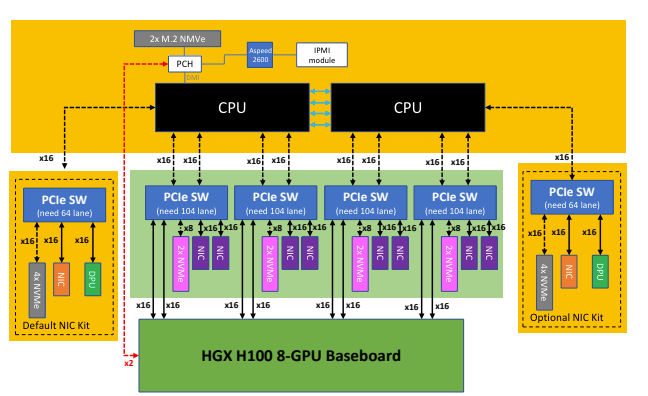

We are all familiar with the PCIe form factor of ConnectX-7, which supports 400Gbit/s; a standard HGX H100 server contains eight of these NICs for the compute fabric. Each ConnectX-7 NIC has its own OSFP cage and 400G OSFP transceiver. On the leaf-switch end, each 2x400G twin-port OSFP transceiver terminates two of these links. So for an HGX H100 server to connect to all the leaf switches, it requires four 2x400G twin-port OSFP transceivers and eight 400G OSFP transceivers, for a total of 12 transceivers.
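A minimal Python sketch of the count above, assuming every 400G link needs exactly one transceiver port at each end and that twin-port modules are fully populated. The helper name `transceivers_needed` is hypothetical, introduced only for illustration.

```python
# Hypothetical helper: transceivers needed to terminate `links` 400G links
# when each transceiver carries `ports_per_transceiver` of them (ceiling division).
def transceivers_needed(links: int, ports_per_transceiver: int) -> int:
    return (links + ports_per_transceiver - 1) // ports_per_transceiver


GPUS_PER_NODE = 8  # one 400G ConnectX-7 PCIe NIC per H100

# Node side: each NIC has its own OSFP cage holding a single-port 400G OSFP.
node_side = transceivers_needed(GPUS_PER_NODE, ports_per_transceiver=1)
# Switch side: 2x400G twin-port OSFP transceivers in the leaf switches.
switch_side = transceivers_needed(GPUS_PER_NODE, ports_per_transceiver=2)

total_pcie = node_side + switch_side
print(f"node={node_side} switch={switch_side} total={total_pcie}")
# node=8 switch=4 total=12
```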

Each H100 is directly connected to a CX-7 NIC through a Broadcom PCIe switch, so that RDMA traffic between the GPU and the NIC does not have to go through the CPU or the PCIe root complex.
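To make the topology argument concrete, here is a toy Python model that walks a PCIe tree and checks whether a GPU-to-NIC path stays below a PCIe switch or has to climb to the root complex. It is a sketch under simplifying assumptions: the device names and the two-switch layout are hypothetical and much smaller than a real HGX H100 board.

```python
# Toy PCIe tree: each device maps to its upstream parent.
# Device names are hypothetical; a real HGX board has more switches and GPUs.
PARENT = {
    "GPU0": "PCIeSwitch0",
    "NIC0": "PCIeSwitch0",
    "GPU1": "PCIeSwitch1",
    "NIC1": "PCIeSwitch1",
    "PCIeSwitch0": "RootComplex0",
    "PCIeSwitch1": "RootComplex0",
}


def upstream_chain(device: str) -> list[str]:
    """Return [device, parent, grandparent, ...] up to the root."""
    chain = [device]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain


def pcie_path(src: str, dst: str) -> list[str]:
    """Path from src to dst through their lowest common ancestor."""
    up_src, up_dst = upstream_chain(src), upstream_chain(dst)
    common = next((n for n in up_src if n in up_dst), None)
    if common is None:
        raise ValueError(f"{src} and {dst} share no PCIe ancestor")
    down = up_dst[: up_dst.index(common)]  # dst-side hops below the common ancestor
    return up_src[: up_src.index(common) + 1] + down[::-1]


def crosses_root_complex(src: str, dst: str) -> bool:
    """True if peer-to-peer traffic would have to pass through a root complex."""
    return any(n.startswith("RootComplex") for n in pcie_path(src, dst))


print(pcie_path("GPU0", "NIC0"))             # ['GPU0', 'PCIeSwitch0', 'NIC0']
print(crosses_root_complex("GPU0", "NIC0"))  # False
print(crosses_root_complex("GPU0", "NIC1"))  # True
```

On a real Linux host with NVIDIA drivers, `nvidia-smi topo -m` reports the same kind of relationship: PIX or PXB means the GPU-NIC path stays behind PCIe bridges/switches, while PHB, NODE, or SYS mean it crosses a host bridge or the CPU interconnect.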

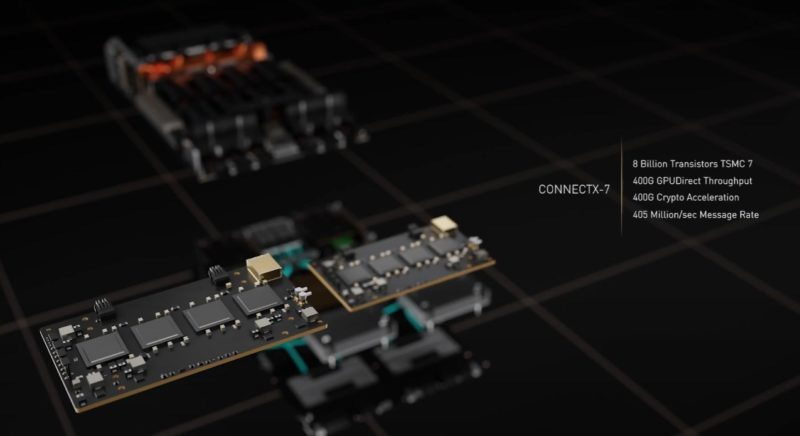

1.6Tbit/s Cedar Fever Module

Each Cedar Fever module contains four custom versions of the 400Gbit/s ConnectX-7 IC, which also act as a PCIe switch to the GPUs. Since each Cedar Fever module has only 1.6Tbit/s of bandwidth (4 x 400Gbit/s), and a DGX H100 node needs 3.2Tbit/s (8 GPUs x 400Gbit/s), each node requires two "Cedar-7" cards.
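The bandwidth arithmetic behind "two Cedar-7 cards per node" can be checked with a few lines of Python. This sketch assumes one 400G compute-fabric link per GPU and one 400G link per ConnectX-7 IC, as described above; the constant names are illustrative.

```python
LINK_GBPS = 400       # each custom ConnectX-7 IC drives one 400G link
ICS_PER_MODULE = 4    # four ConnectX-7 ICs per Cedar Fever module
GPUS_PER_NODE = 8     # DGX H100
GBPS_PER_GPU = 400    # one 400G compute-fabric link per GPU

module_gbps = LINK_GBPS * ICS_PER_MODULE   # 1600 Gbit/s = 1.6 Tbit/s
node_gbps = GPUS_PER_NODE * GBPS_PER_GPU   # 3200 Gbit/s = 3.2 Tbit/s

# Ceiling division: a node cannot use a fraction of a module.
modules_per_node = -(-node_gbps // module_gbps)

print(module_gbps, node_gbps, modules_per_node)  # 1600 3200 2
```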

The main benefit of the Cedar Fever module is that it needs only 4 OSFP cages instead of 8, which allows twin-port 2x400G transceivers to be used on the compute-node end too, not just on the switch end. This reduces the number of transceivers needed to connect each H100 node to the leaf switches from 12 to 8.
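The 12-to-8 reduction follows from changing only the node-side transceiver type. A small sketch, assuming eight 400G links per node and twin-port 2x400G transceivers on the leaf-switch side in both designs; `transceivers_needed` and `node_transceivers` are hypothetical helpers.

```python
LINKS_PER_NODE = 8           # eight 400G compute-fabric links per H100 node
SWITCH_PORTS_PER_XCVR = 2    # 2x400G twin-port OSFP on the leaf switch


def transceivers_needed(links: int, ports_per_transceiver: int) -> int:
    """Ceiling division: links divided by ports per transceiver."""
    return (links + ports_per_transceiver - 1) // ports_per_transceiver


def node_transceivers(node_ports_per_xcvr: int) -> int:
    """Total transceivers for one node: node side plus leaf-switch side."""
    node_side = transceivers_needed(LINKS_PER_NODE, node_ports_per_xcvr)
    switch_side = transceivers_needed(LINKS_PER_NODE, SWITCH_PORTS_PER_XCVR)
    return node_side + switch_side


pcie = node_transceivers(1)   # 8 single-port + 4 twin-port = 12
cedar = node_transceivers(2)  # 4 twin-port + 4 twin-port = 8
print(pcie, cedar, pcie - cedar)  # 12 8 4
```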

Cost Analysis

Per Scalable Unit (SU) of 256 H100s, switching to the Cedar Fever module saves close to $900k in transceiver cost. This lowers transceivers from 44.74% of the InfiniBand compute fabric BoM to 37.93%.
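The two percentages and the savings figure together pin down the implied per-SU fabric BoM. The sketch below solves for it, assuming "close to $900k" is exactly $900,000 and that the transceiver line is the only BoM item that changes (for example, NIC cost is held constant). The resulting dollar figures are implied by those assumptions, not taken from the analysis itself, and `implied_fabric_bom` is a hypothetical helper.

```python
def implied_fabric_bom(savings: float, share_before: float, share_after: float):
    """Solve for fabric BoM F and transceiver cost T before the switch.

    Assumes only transceiver cost changes, falling by `savings`:
        T / F = share_before
        (T - savings) / (F - savings) = share_after
    which gives F = savings * (1 - share_after) / (share_before - share_after).
    """
    if not 0 <= share_after < share_before < 1:
        raise ValueError("expected 0 <= share_after < share_before < 1")
    fabric = savings * (1 - share_after) / (share_before - share_after)
    transceivers = share_before * fabric
    return fabric, transceivers


fabric_bom, transceiver_cost = implied_fabric_bom(900_000, 0.4474, 0.3793)
print(f"fabric BoM ~ ${fabric_bom / 1e6:.2f}M, transceivers ~ ${transceiver_cost / 1e6:.2f}M per SU")
# fabric BoM ~ $8.20M, transceivers ~ $3.67M per SU
```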

In a typical hyperscaler deployment of 24,576 H100s (96 SUs), this means a savings of $86.4 million on transceivers. This is why Microsoft Azure, the largest customer of H100s, chooses to use Cedar Fever modules. At a deployment rate of over 500k H100s per year, that translates to a cost saving of about $1.75 billion per year.
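Scaling the per-SU savings to fleet size is a simple proportion. This sketch assumes exactly $900,000 saved per 256-GPU SU and allows fractional SUs; with those assumptions, 500k GPUs come out at roughly $1.76 billion, consistent with the rounded figure in the text.

```python
GPUS_PER_SU = 256
SAVINGS_PER_SU = 900_000  # "close to $900k" per SU, taken as exactly $900k


def fleet_savings(num_gpus: int) -> float:
    """Transceiver savings in dollars for a deployment of `num_gpus` H100s."""
    return num_gpus * SAVINGS_PER_SU / GPUS_PER_SU


for n in (24_576, 500_000):
    print(f"{n:,} GPUs -> ${fleet_savings(n) / 1e6:,.1f}M")
# 24,576 GPUs -> $86.4M
# 500,000 GPUs -> $1,757.8M
```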

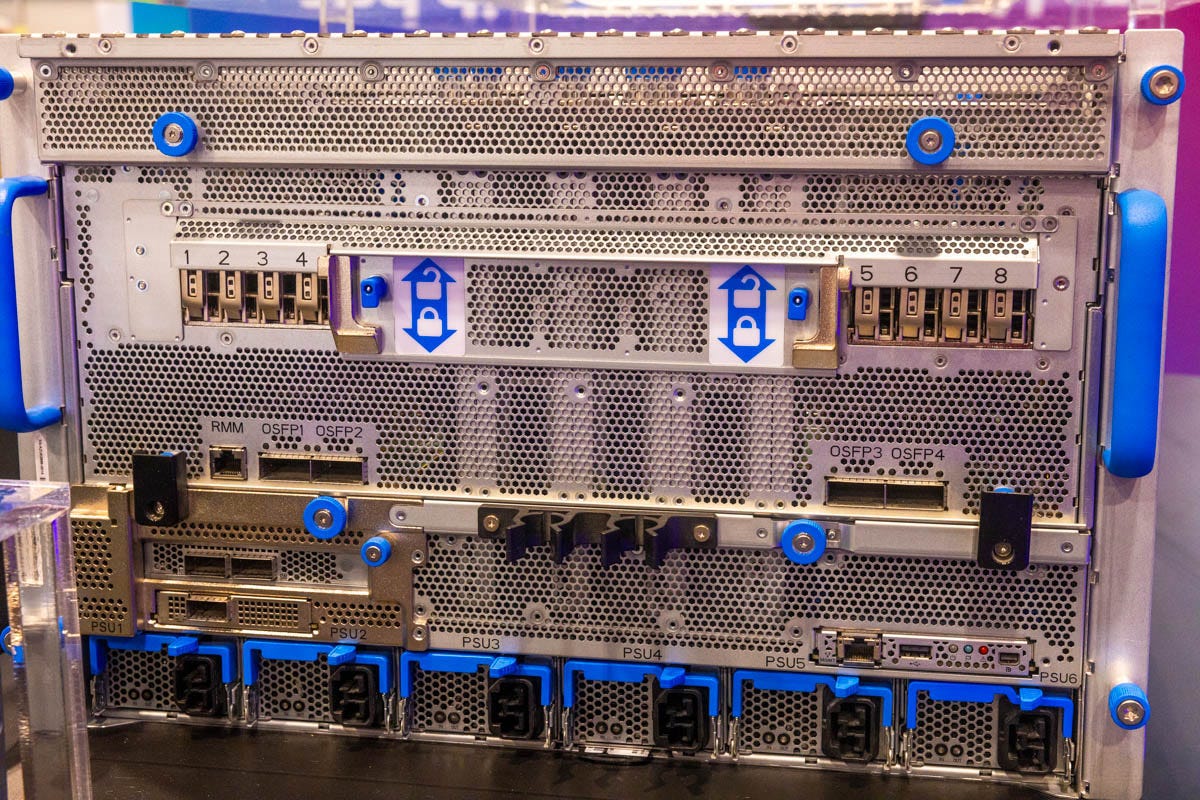

Customer Install Base Analysis

The two main chassis that use Cedar Fever are NVIDIA's DGX chassis and Microsoft Azure's ND H100 v5 chassis. You can identify them because chassis that use Cedar Fever have only 4 OSFP cages for the GPU compute fabric, as opposed to 8. Having 4 OSFP cages instead of 8 also brings power and cooling advantages. One disadvantage of Cedar Fever is that twin-port 2x400G LinkX InfiniBand transceivers are in much higher demand than single-port 400G transceivers. However, NVIDIA may give customers "kudos points" for buying more of its reference designs, so this may not matter.
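The photo-based identification rule can be written as a tiny classifier. This is a sketch of the heuristic stated above, assuming you count only the OSFP cages serving the GPU compute fabric (not storage or front-end NICs) on an 8-GPU chassis; `nic_form_factor` is a hypothetical name.

```python
def nic_form_factor(compute_osfp_cages: int, gpus: int = 8) -> str:
    """Guess the compute-fabric NIC form factor from the OSFP cage count.

    One cage per GPU suggests eight single-port ConnectX-7 PCIe cards;
    one cage per two GPUs suggests twin-port cages fed by Cedar Fever modules.
    """
    if compute_osfp_cages == gpus:
        return "ConnectX-7 PCIe"
    if compute_osfp_cages * 2 == gpus:
        return "Cedar Fever"
    return "unknown"


for cages in (4, 8):
    print(cages, nic_form_factor(cages))
# 4 Cedar Fever
# 8 ConnectX-7 PCIe
```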

Here are the OEMs and major hyperscalers that don’t use Cedar Fever module and just stay with the standard ConnectX-7 PCIe form factor:

Meta Grand Teton

Supermicro SYS-821GE-TNHR (and AMD equivalent)

HPE Cray XD670

Dell PowerEdge XE9680

Gigabyte G593-SD0 (and AMD equivalent)

Basically all the other OEMs

Sources: OEM websites and the Meta OCP website

Conclusion

In this post, we have analyzed why the largest H100 customer, Microsoft Azure, uses Cedar Fever modules instead of the standard ConnectX-7 PCIe form factor. We have also analyzed the massive cost advantage and the slight power and cooling advantages of the Cedar Fever module. Furthermore, we have analyzed the major OEM, ODM, and hyperscaler chassis to see who is using the Cedar Fever module.